
You couldn't miss the theme of Generative Artificial Intelligence (GenAI) at the Black Hat cybersecurity conference, which took place in Las Vegas back in August. The conference's opening keynote focused on the promise and perils of this emerging technology, likening it to the first release of the iPhone, which was also insecure and riddled with critical bugs.

Talk about the risks associated with this technology isn't new: GenAI and Large Language Models (LLMs) have been around since 2017. What is new, however, is the introduction of ChatGPT in November 2022, which generated widespread attention and has really spurred the security industry to focus much more on the implications of GenAI for cybersecurity.

In this blog series, we'll explore some of those implications, assess how the double-edged digital sword of GenAI could tilt the playing field toward either defenders or attackers depending on the circumstances, and highlight where startup companies are leading the way in harnessing the technology.

New capabilities, new risks

There are several reasons GenAI is causing concern in cyber circles. First, it offers a new set of capabilities over and above previous AI technology, which was primarily used to make decisions or predictions based on analysis of data inputs. Thanks to advances in computing and algorithms, GenAI can now be applied to a new category of tasks in cyber: developing code, responding to incidents, and summarizing threats.

Transformer models, or neural networks that learn context by tracking relationships in sequential data, are a key driver of these capabilities. Together with their associated attention mechanisms, which enable the algorithms to selectively focus on certain elements of input sequences while parsing them, the models look set to change the cyber landscape in a second significant way too.
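To make the idea of attention a little more concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is an illustration only: the toy vectors and the function names (`softmax`, `attention`) are our own assumptions, and production models use learned projections, many attention heads, and optimized tensor libraries rather than nested lists.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector.

    Each key is scored against the query; the scores become weights,
    and the output is the weighted average of the value vectors.
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    width = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(width)]
    return weights, output

# Toy input: three sequence positions, each with a 2-dimensional key and value.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]

weights, output = attention(query, keys, values)
print(weights)  # positions 1 and 3 receive more weight than position 2
print(output)
```

The query overlaps with the first and third keys but not the second, so those two positions receive the larger weights. That is the "selective focus" described above, reduced to a few lines.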

The previous generation of AI promised to automate more decision-making but delivered too many false alerts, disappointing practitioners. GenAI models trained on a large corpus of publicly available data, then fine-tuned with industry-specific data, promise to deliver outputs and predictions that are more robust and reliable.

They could also help address one of the industry's biggest headaches: the burden imposed by a talent shortage that has left many hundreds of thousands of cybersecurity roles in the United States unfilled, a situation that has been highlighted this year by both the U.S. Congress and the White House. Tools that leverage GenAI can help by enabling analysts to get more done in their daily workflows and by making it easier to fully automate a greater percentage of their work.

The not-so-good news is that the risks of GenAI are becoming ever clearer. Defenders fear that adversaries armed with the technology will take social engineering to the next level, using it to craft perfectly targeted phishing emails or audio that's been engineered to, for instance, replicate the specific tone and cadence of a boss's voice. They are also concerned that criminals and nation states will leverage LLMs to generate unlimited permutations of malware, undetectable by existing signature- and heuristic-detection platforms.
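A toy example shows why endless permutations are a problem for signature matching. The sketch below assumes a deliberately simplified detector that flags a payload only when its SHA-256 hash appears in a known-bad list. The names `signature`, `known_bad`, and `is_flagged` are hypothetical, the "payloads" are harmless strings, and real detection platforms layer many more techniques on top of exact hashes.

```python
import hashlib

def signature(payload: bytes) -> str:
    """Exact-match signature: the SHA-256 digest of the payload bytes."""
    return hashlib.sha256(payload).hexdigest()

# Harmless stand-ins for a known sample and a trivially rewritten variant.
original = b"print('hello')"
variant = b"print( 'hello' )"  # same behavior, different bytes

# Hypothetical blocklist containing only the sample seen so far.
known_bad = {signature(original)}

def is_flagged(payload: bytes) -> bool:
    """Flag a payload only if its exact signature is already known."""
    return signature(payload) in known_bad

print(is_flagged(original))  # True
print(is_flagged(variant))   # False
```

A cosmetic change to the bytes yields a completely different hash, so the variant passes an exact-match check. An LLM that can rewrite code at will could, in principle, produce such variants at scale, which is the concern described above.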

Working with AI copilots

These issues are accentuated by the fact that the technology is evolving fast. AI copilots, which learn from users' behavior and offer guidance and assistance with tasks, are one example of this change. Large companies such as Microsoft with Security Copilot and CrowdStrike with Charlotte AI are making headlines with tools that use the technology to automate incident response and quickly summarize key endpoint threats. Startups, too, are integrating GenAI into their offerings, and entrepreneurs are launching new companies to leverage GenAI in the cyber domain.

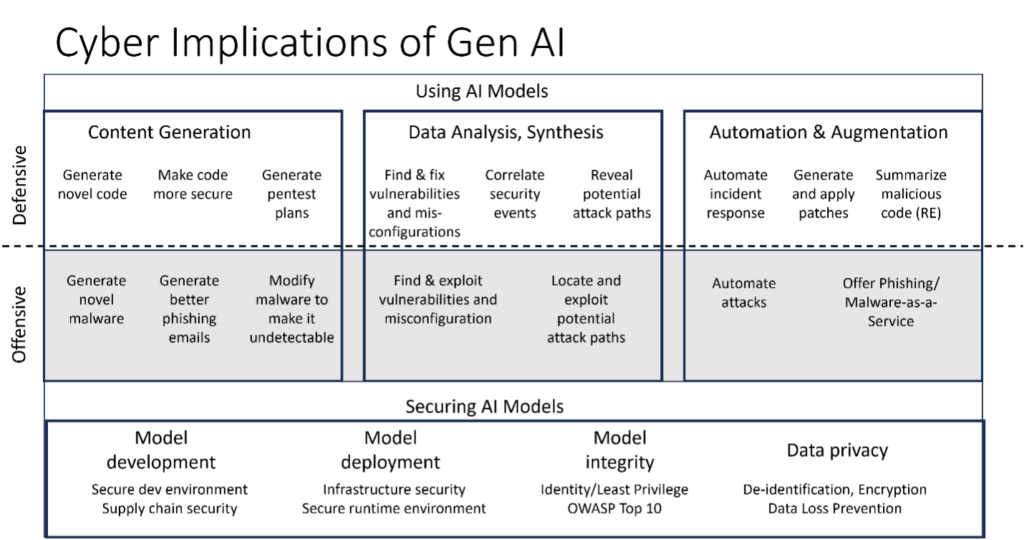

The market is morphing so quickly it's hard to see the overall picture clearly. To bring it into sharper focus, we've developed a high-level market map (shown below) that highlights two critical dimensions where we see GenAI impacting the cybersecurity ecosystem. The first of these is the use of GenAI models to power both defensive and offensive capabilities. The second focuses on how to secure the models themselves.

In this blog series, we'll zero in on the first of these two dimensions. (A future series will look at threats to the way the models work, including risks such as malicious prompt injections and data poisoning. In the meantime, you can see some of our existing cyber-assurance work on AI here and here.)

Within the first dimension, we're going to focus on three main sub-categories:

- Cyber implications of content and code generation. As we noted above, LLMs can be used to create fresh content, such as novel code or phishing emails. Attackers can employ tools such as WormGPT to write targeted and highly convincing business email compromise (BEC) messages, while tools such as FraudGPT can help them generate malicious code. At the same time, defenders can use LLMs to develop code that's hopefully more secure and to generate patches faster to address vulnerabilities.

- The use of GenAI in cyber data analysis and synthesis. By training on large amounts of threat intelligence, network, and endpoint data, LLMs can provide targeted summaries of relevant threats and exploitable vulnerabilities more quickly and thoroughly than existing manual processes. For example, Google's Sec-PaLM powers its Security AI Workbench and is trained on Mandiant's vast trove of threat-intelligence data.

- Augmentation and automation of security tasks. While we don't believe humans can be taken out of the loop in incident response, GenAI can help automate more of analysts' low-value tasks so they can focus on true positives and other important security work. The tech may also make it possible to investigate all alerts, rather than following the current widespread approach of prioritizing some alerts over others for follow-up.

In our conversations with our portfolio businesses and many other cyber companies that IQT considers for investment every year, we're learning of startups that are driving innovative new approaches with GenAI and LLMs. They include firms such as IQT portfolio company Vector35, which is using GenAI for reverse engineering of malicious code; Dropzone AI, which leverages LLMs for autonomous alert investigation; and Amplify Security, which uses LLMs to generate remediation code within a developer's native workflow.

In subsequent posts, we'll dive more deeply into the three sub-categories mentioned above, starting with GenAI-powered content and code generation and the threats and opportunities these pose in cyber. In the process, we'll start filling out our map with examples of some startups that are working on novel solutions to address the challenges, and the opportunities, these areas raise as part of a new wave of cyber innovation. The efforts of these and other young companies will help determine who comes out on top in the next iteration of the perpetual cat-and-mouse contest in the world of cybersecurity.

This post is the first in an IQT series on the implications of Generative AI for cybersecurity. You can read our second post here.

(Is your company applying GenAI in the cyber domain? If so, please contact us at cyber@iqt.org so we can include you in our market map.)